If I am working on my SEO, I want to know whether it is producing results, so it makes sense to understand right away how to measure those results with the tools Google provides. Google Search Console is an indispensable tool that complements Google Analytics, and we are going to explore it right now!

Update: Google recently released a new version of Google Search Console. Great news: this guide is fully up to date with it. Read on to see for yourself :)

Contents

- How do I create a Google Search Console account?

- How do I track my SEO performance?

- Use the Performance report to optimize your SEO

- How do I handle indexing errors with Google Search Console?

- The URL inspection tool

- Managing links with Google Search Console

- How do I manage my site's mobile usability?

- Go further with Google Search Console

How do I create a Google Search Console account?

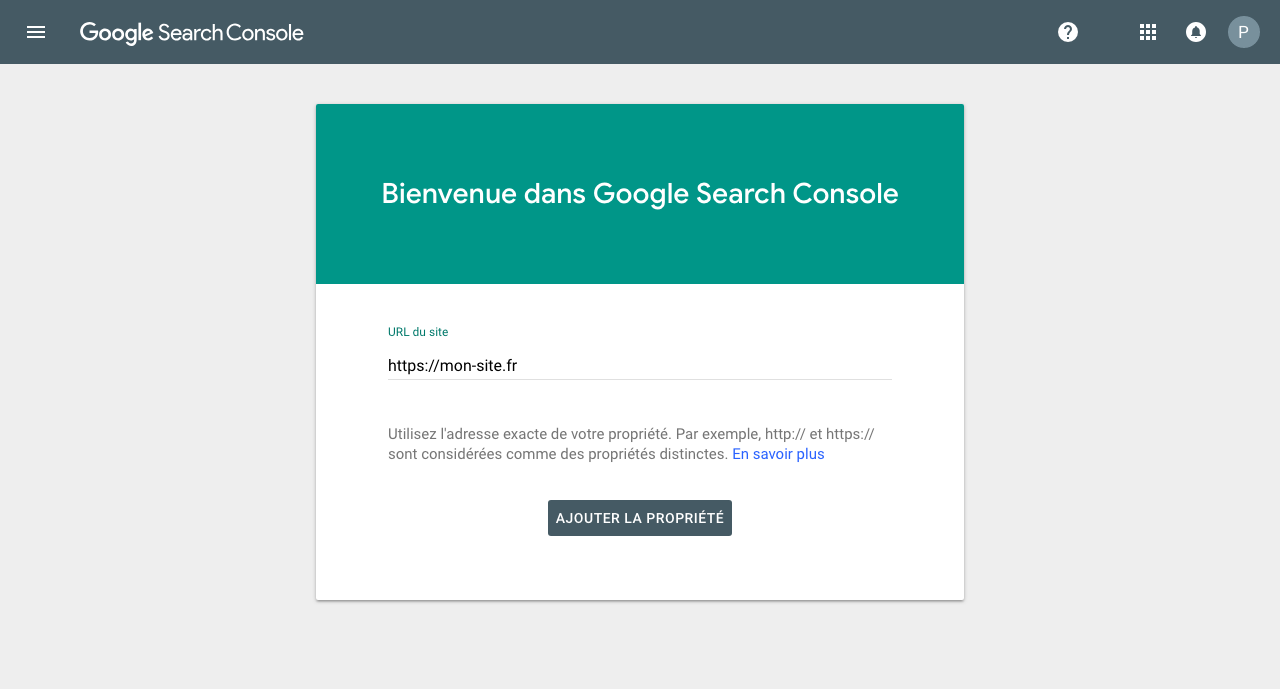

Enter your site's URL in the corresponding field, then confirm by clicking “Add property”.

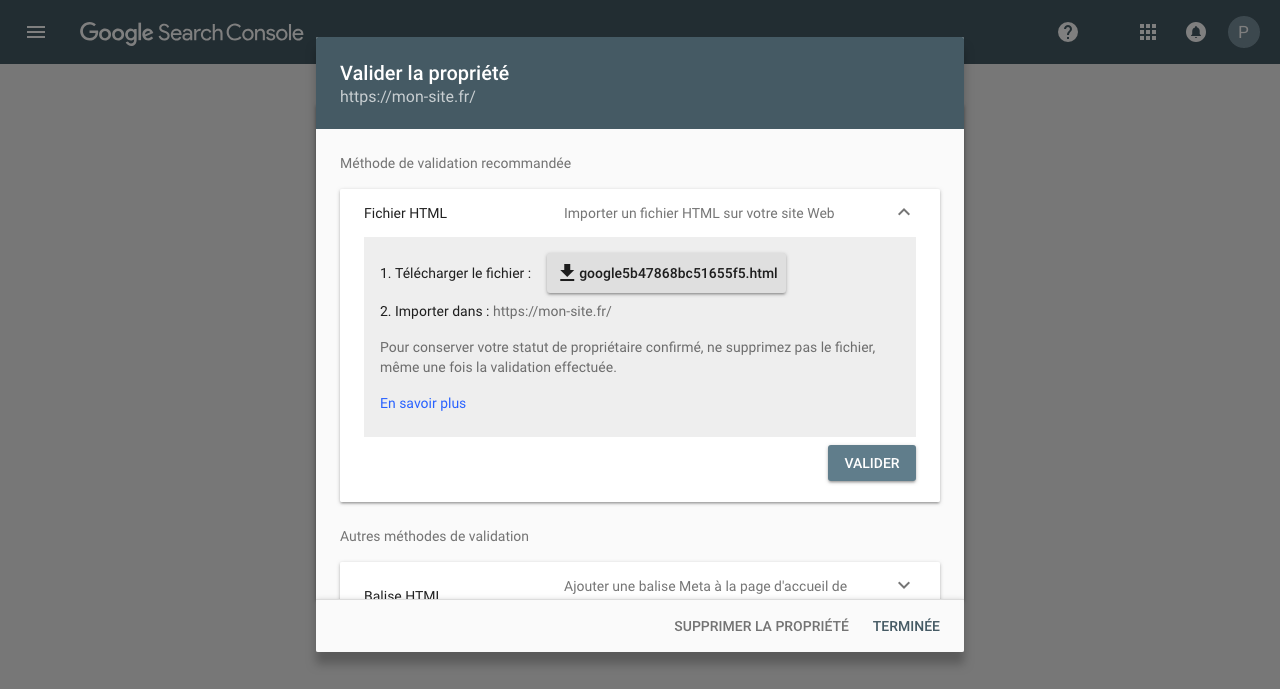

There are several methods for verifying ownership of your site:

The first method, shown by default by Google, uses an HTML file (it requires access to your website's files on the server).

To do this:

- Download the verification file, whose name contains a unique number. Save it to your documents or your desktop without changing the file name.

- Upload the file to the root of your site.

- Check that the upload went through. If it did, the verification file should display in your browser like any other page of your site. The URL of this page should be your domain name followed by the name of the verification file. For example: http://www.mon-site.com/googleff2a43992774ce75.html

- Go back to the Google Search Console interface and confirm the verification.

Warning: Do not delete the verification file from the root of your domain, or you risk losing access to your Google Search Console data.
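The upload check described above can also be scripted. Here is a minimal sketch, assuming the example domain and file name used in this guide; the function names are ours and are not part of any Google tool.

```python
from urllib.request import urlopen


def verification_url(site_root: str, filename: str) -> str:
    """Build the public URL of the verification file at the site root."""
    return site_root.rstrip("/") + "/" + filename.lstrip("/")


def verification_file_is_reachable(site_root: str, filename: str) -> bool:
    """Return True if the file answers with HTTP 200 (performs a network request)."""
    try:
        with urlopen(verification_url(site_root, filename), timeout=10) as response:
            return response.status == 200
    except OSError:
        # Covers HTTP errors (404, 500...), DNS failures and timeouts.
        return False


# Example values taken from this guide; replace them with your own.
print(verification_url("http://www.mon-site.com", "googleff2a43992774ce75.html"))
```

Calling `verification_file_is_reachable(...)` with your own domain and file name should return `True` once the upload has worked.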

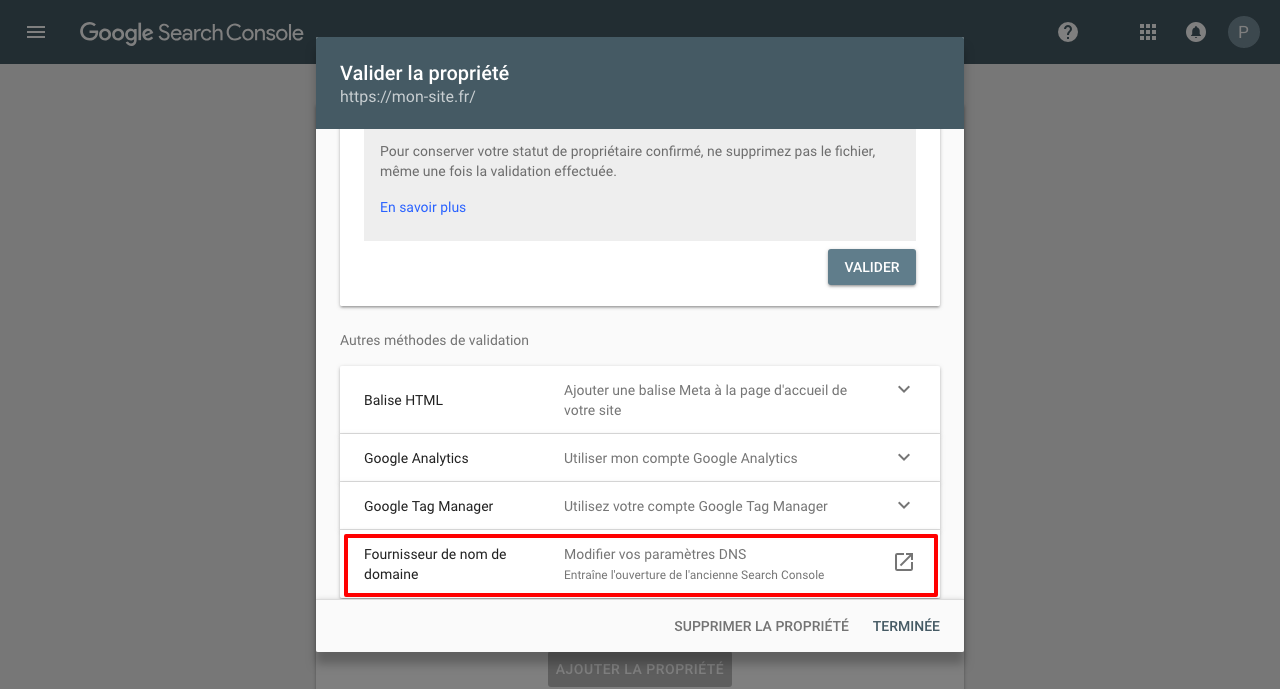

The second method consists of changing your DNS settings directly in the account you have with your domain provider (a simpler method).

Select “Domain name provider”, as shown below, at the very bottom of the ownership verification form.

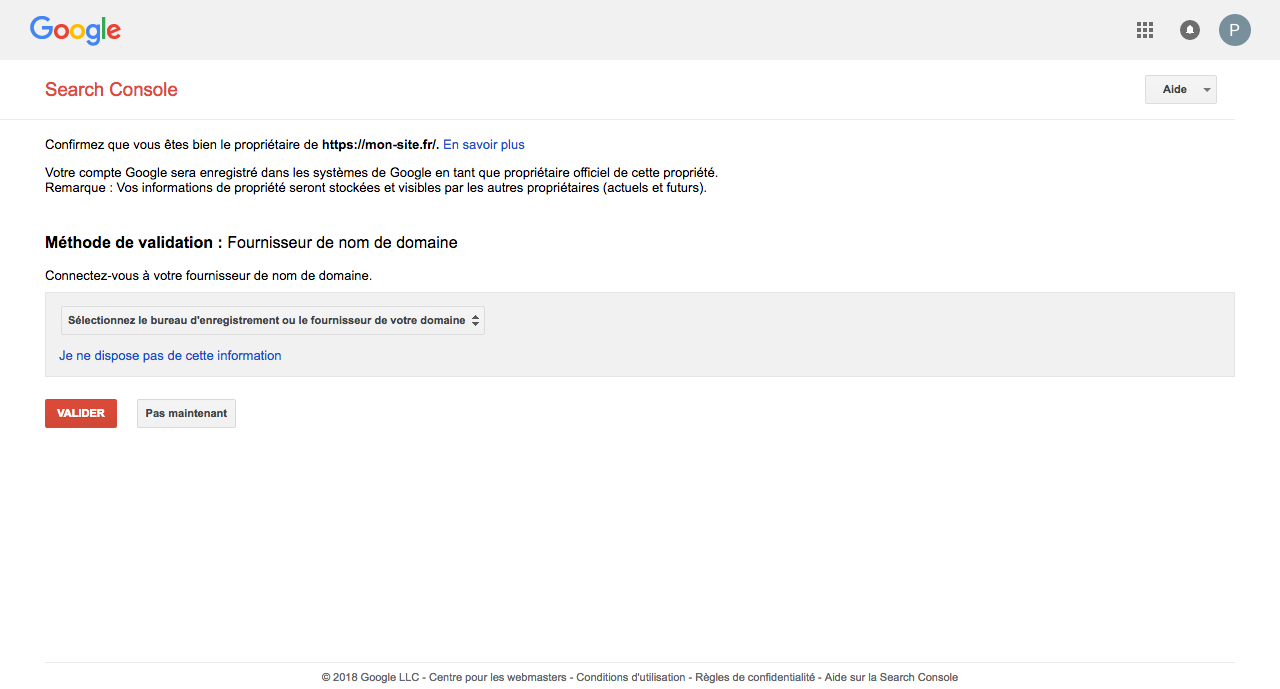

You will be redirected to another page.

Choose your domain provider, then follow the steps your provider gives you.

Warning: If you have several sites, you must repeat the add-property and verification steps for each of them.

Don't forget to register both the secure (https) and non-secure (http) versions of your site, as well as your subdomains.

Example:

- http://mon-site.com

- https://mon-site.com

- https://blog.mon-site.com

- http://blog.mon-site.com
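If you manage several subdomains, listing every variant by hand gets tedious. This small sketch generates the list of properties to register; the function name and the sample values are illustrative assumptions.

```python
from itertools import product


def property_variants(domain: str, subdomains: list[str]) -> list[str]:
    """List the http and https variants of a domain and its subdomains."""
    hosts = [domain] + [f"{sub}.{domain}" for sub in subdomains]
    return [f"{scheme}://{host}" for host, scheme in product(hosts, ("http", "https"))]


for url in property_variants("mon-site.com", ["blog"]):
    print(url)
```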

Linking Google Analytics and Google Search Console

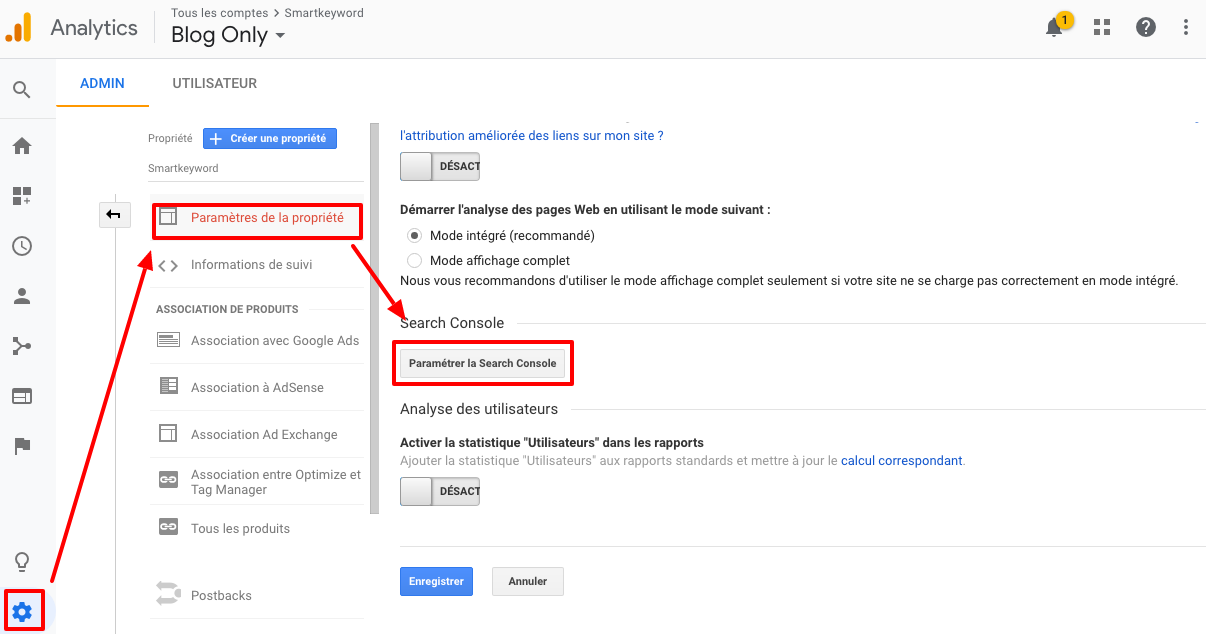

Open Google Analytics and go to the settings.

Then click “Property settings”, scroll down to Google Search Console, click “Adjust Search Console”, then Add, then Save.

That's it: your Google Search Console account is now set up, and you can start optimizing your site!

How do I track my SEO performance?

Go to the “Performance” report, where you can track all of your search traffic in terms of clicks, impressions, CTR (click-through rate) and average position.

- The Clicks metric shows how many clicks you received from the search results.

- The Impressions metric shows how many times users saw your result in Google's results pages. (Google counts these impressions according to several factors, and you don't necessarily need to know them all.)

- The CTR (click-through rate) is the percentage of impressions of your links on Google's results pages that led to a click. Simply click “CTR” to display it.

- The average position gives you your positions on the keywords that matter to you, as well as your overall average position. This is obviously another indicator to watch: the closer your positions are to the first spot on the first page (the dream!), the more traffic you capture.

Are you unusually poorly positioned on certain topics? Are your positions trending up or down? Simply click “Position” and you will find out!
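To make these metrics concrete, here is a small sketch of how CTR and an average position can be computed from raw numbers. The impression-weighted average is a simplification we assume for illustration; Google's own computation of average position is more detailed.

```python
def ctr_percent(clicks: int, impressions: int) -> float:
    """Click-through rate: the share of impressions that led to a click, in percent."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


def weighted_average_position(rows: list[tuple[float, int]]) -> float:
    """Average position over (position, impressions) pairs, weighted by impressions."""
    total_impressions = sum(impressions for _, impressions in rows)
    if total_impressions == 0:
        return 0.0
    return sum(position * impressions for position, impressions in rows) / total_impressions


print(ctr_percent(50, 1000))                            # 5.0
print(weighted_average_position([(2, 100), (8, 300)]))  # 6.5
```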

Below this graph, you can filter the data to refine your analysis by:

- Queries, which lists the top keywords typed by users that led them to your site.

- Pages, which lists the pages of your site that appeared most in the search results.

- Countries, which lists the countries the searches came from, in descending order.

- Devices, which shows which devices were used for the search.

- Search appearance, which shows your rich results.

Key takeaway: When you filter by pages, you get the list of your top pages displayed in the SERP. When you click on a page, the report automatically restricts all the data to that page.

If you then click the “Queries” tab, it shows all the keywords that page ranks for, in descending order.

This report also lets you filter your data by date, and the data goes back up to 16 months in this new version of Google Search Console.

Use the Performance report to optimize your SEO

Boost your CTR

In this scenario, we focus on pages that have a low CTR but that rank, meaning they are positioned on the first page of Google.

These are keywords that can bring you traffic!

To do this, we will focus on keywords positioned between 1 and 5 but with a CTR below 4.88%.

That is because, according to Advanced Web Ranking, position 5 has a CTR of about 4.88%.

In “Queries”, open the filter menu, select Position and filter on “smaller than 5”.

Make sure “Position” is still selected, then select CTR and filter on “smaller than 4.88” (use a period, not a comma, as the decimal separator).

You now have a list of keywords with potential!
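The same filter can be applied to exported data. The sketch below assumes each row carries a query, its clicks, impressions and position; the `QueryRow` class and the sample figures are invented for illustration, and only the 4.88% threshold comes from this guide.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int
    position: float

    @property
    def ctr(self) -> float:
        """CTR in percent."""
        return 100.0 * self.clicks / self.impressions if self.impressions else 0.0


def low_ctr_candidates(rows, max_position=5.0, ctr_threshold=4.88):
    """Queries ranking in the top positions whose CTR is below the threshold."""
    hits = [r for r in rows if r.position <= max_position and r.ctr < ctr_threshold]
    # Highest impressions first: these have the most traffic to gain.
    return sorted(hits, key=lambda r: r.impressions, reverse=True)


rows = [
    QueryRow("running shoes", 30, 2000, 3.2),  # CTR 1.5%
    QueryRow("trail shoes", 90, 1000, 2.0),    # CTR 9.0%
    QueryRow("hiking boots", 10, 500, 4.5),    # CTR 2.0%
    QueryRow("sandals", 5, 900, 12.0),         # not in the top 5
]
for row in low_ctr_candidates(rows):
    print(row.query, round(row.ctr, 2))
```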

Pick the keywords that have high impressions but a low CTR.

Click on the keyword to find the corresponding page.

Then take a look at the keywords that rank for this page.

Nothing could be simpler: click “+ New” in the Performance report and select “Page” to enter the URL of the page in question.

You will most likely see that the page has a high number of impressions but a low CTR, which confirms our suspicions!

Now that you have located the page, edit its title and meta description to increase your CTR.

After making your changes, monitor their effect using the date comparison feature in the report.

Find keywords

Still in the Performance report, Google Search Console is also a good way to expand your list of keywords to optimize your SEO.

Using the Performance report, a few steps are enough to uncover some gems!

Nothing could be simpler:

- Set the date filter to the last 28 days.

- Adjust the query filter to show positions greater than 8.

- Sort the keywords by impressions, in descending order.

Keywords that generate impressions but have not yet reached the first page of Google, or are barely at the bottom of it, are the gems.

Note them down so you can work on improving your positions for them, which raises your click-through rate and, ultimately, generates sales.
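Here is a sketch of the same three steps applied to exported rows, assuming the export already covers the last 28 days. The dictionary keys and the sample data are hypothetical; adapt them to the columns of your own export.

```python
def keyword_gems(rows: list[dict], min_position: float = 8.0) -> list[dict]:
    """Keep queries ranked beyond `min_position`, highest impressions first."""
    gems = [row for row in rows if row["position"] > min_position]
    return sorted(gems, key=lambda row: row["impressions"], reverse=True)


rows = [
    {"query": "seo audit", "impressions": 1200, "position": 9.4},
    {"query": "search console", "impressions": 5000, "position": 3.1},
    {"query": "index coverage", "impressions": 3400, "position": 11.8},
]
for row in keyword_gems(rows):
    print(row["query"], row["impressions"], row["position"])
```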

How do I handle indexing errors with Google Search Console?

The Coverage report tells you which pages have been indexed and what needs fixing for those that could not be.

To open it, go to the “Index” section of the menu, then “Coverage”.

The Coverage report is divided into 4 sections: Error, Valid with warnings, Valid and Excluded.

The “Valid” pages report

We recommend starting with the “Valid” section, in green.

This section lists the URLs that are indexed and valid, meaning Google found no error or anomaly of any kind on them.

First, check the total number of URLs shown at the top under “Valid” (2.3k in our example). Does it match the number of pages on your site that should be indexed? If not, keep that in mind, because the missing URLs are very probably in one or more of the 3 other sections, which we go through below.

The graph also lets you follow the progress of indexing: does it look consistent to you? Or, on the contrary, is it lower or higher than the actual rate at which content is created on your site? Again, keep this in mind for what follows.

In the sitemap or not?

Further down, in the “Details” subsection, there are 2 rows: “Submitted and indexed” and “Indexed, not submitted in sitemap”.

If you have an up-to-date sitemap that contains all your indexable content, all your valid URLs should be under “Submitted and indexed”.

=> But there are sometimes inconsistencies to spot and fix.

To do so, take a closer look at the list of affected URLs by clicking on the corresponding row.

List of submitted and indexed URLs

If the list contains URLs that should not be indexed, review the declared sitemap(s) to identify any errors, then deindex these unwanted pages.

List of URLs indexed but not submitted in a sitemap

You have URLs in this subsection because:

- the sitemap is not up to date, or it does not contain all your URLs but only the main ones: don't panic, you simply need to update your sitemap(s). This is the case in our example.

- you don't have a sitemap because the size of your site doesn't justify creating one.
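To see which indexed URLs are missing from a sitemap, you can compare the two lists yourself. This is a minimal sketch that assumes a standard XML sitemap (sitemaps.org namespace) and a list of indexed URLs exported from the report; the sample sitemap and URLs are made up.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Extract every <loc> value from a standard XML sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def indexed_but_not_submitted(indexed: list[str], sitemap_xml: str) -> list[str]:
    """URLs that are indexed but absent from the sitemap."""
    return sorted(set(indexed) - sitemap_urls(sitemap_xml))


SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://mon-site.com/</loc></url>
  <url><loc>https://mon-site.com/blog/</loc></url>
</urlset>"""

indexed = [
    "https://mon-site.com/",
    "https://mon-site.com/blog/",
    "https://mon-site.com/old-page/",
]
print(indexed_but_not_submitted(indexed, SAMPLE_SITEMAP))
```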

The warnings report

Next, we look at the section we recommend checking second: “Valid with warnings”, in orange.

This report shows the pages for which Google issues a warning, typically because a page is indexed even though it is blocked by the robots.txt file.

What the warning means

Because robots.txt is a tool for blocking crawling, not for deindexing, some blocked pages may still be indexed by Google if a third-party site links to them.

Interpretation and fix

Click on the “Warnings” section, then on the corresponding row in the “Details” subsection to display the detailed list:

- If these pages should be indexed: remove them from robots.txt as soon as possible so that they can be crawled and indexed properly.

- Otherwise: remove these pages from robots.txt, deindex them properly (Google has to be able to crawl a page to see its “noindex” directive), then put them back in robots.txt.
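Before editing robots.txt, it helps to confirm which URLs are actually blocked. The sketch below uses Python's standard `urllib.robotparser`; note that its matching rules are simpler than Googlebot's (no wildcard support, for instance), so treat the result as a first check only. The robots.txt content and URLs are illustrative.

```python
from urllib.robotparser import RobotFileParser


def blocked_for_googlebot(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt content disallows the URL for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("Googlebot", url)


ROBOTS_TXT = """User-agent: *
Disallow: /private/
"""

print(blocked_for_googlebot(ROBOTS_TXT, "https://mon-site.com/private/page.html"))
print(blocked_for_googlebot(ROBOTS_TXT, "https://mon-site.com/blog/"))
```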

The errors report

Next comes the section we recommend checking third: “Error”, in red.

This section lists the URLs that Google has not indexed because they have errors. Unlike the “Excluded” section, these are URLs you chose to submit to Google via a sitemap, which is why Google alerts you through this error section.

In our example, there are 131 errors caused by 2 issues.

Click on the red “Error” section. In the table below it, you can identify the cause of each error in order to fix it, then click on each error to get the list of affected URLs.

Technical errors

Let's first list the “technical” errors:

- Server error (5xx): the server failed to fulfil an apparently valid request.

- Redirect error: the 301/302 redirect does not work (for example, a redirect loop or a redirect chain that is too long).

- Submitted URL seems to be a Soft 404: you submitted this page for indexing, but the server returned what appears to be a soft 404.

- Submitted URL returns unauthorized request (401): you submitted this page for indexing, but Google received a 401 (unauthorized) response.

- Submitted URL not found (404): you submitted a URL for indexing, but it does not exist.

- Submitted URL has crawl issue: you submitted this page for indexing, and Google encountered an unspecified crawl error that does not fall into any of the other categories.

For all these errors: fix the error if the page should be indexed; otherwise, remove the page from the sitemap and from your internal linking (internal links).
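When you review a list of submitted URLs, a quick way to triage them is to map each HTTP status code to the matching error family above. The mapping below is our own simplification for illustration, not Google's classification logic; a soft 404, in particular, cannot be detected from the status code alone.

```python
def coverage_error_for_status(status: int) -> str:
    """Map an HTTP status code to the closest error family from the report."""
    if 500 <= status <= 599:
        return "Server error (5xx)"
    if status == 401:
        return "Unauthorized request (401)"
    if status == 404:
        return "Not found (404)"
    if 300 <= status <= 399:
        return "Redirect: check that the chain resolves"
    if 200 <= status <= 299:
        return "OK: check the content for a possible soft 404"
    return "Other crawl issue"


for code in (200, 301, 401, 404, 503):
    print(code, "->", coverage_error_for_status(code))
```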

Indexing errors

- Submitted URL blocked by robots.txt: this page is blocked by the robots.txt file and submitted in the XML sitemap at the same time. Remove it from robots.txt or from the XML sitemap, depending on whether or not you want it indexed.

- Submitted URL marked “noindex”: you submitted this page for indexing, but it has a “noindex” directive in a meta tag or an HTTP header. If you want this page indexed, remove the tag or the HTTP header; otherwise, remove the page from the sitemap.
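A “noindex” directive can live in two places: a robots meta tag in the HTML or an `X-Robots-Tag` HTTP header. Here is a minimal sketch that checks both, assuming you already have the page's HTML and response headers at hand; the function and class names are ours.

```python
from html.parser import HTMLParser
from typing import Optional


class _RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives.append((attributes.get("content") or "").lower())


def has_noindex(html: str, headers: Optional[dict] = None) -> bool:
    """True if a noindex directive appears in a robots meta tag or the X-Robots-Tag header."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    header_value = ""
    for key, value in (headers or {}).items():
        if key.lower() == "x-robots-tag":
            header_value = value.lower()
    return any("noindex" in d for d in parser.directives) or "noindex" in header_value


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```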

You can now fix your pages so that no errors remain and they can be indexed without any problem!

The “Excluded” pages report

Finally, we look at the section we recommend checking last: “Excluded”, in gray.

This section lists the URLs that Google has not indexed and assumes you left out on purpose. Unlike the “Error” section, these are URLs that you did not submit to Google via a sitemap, which is why Google cannot presume that their absence from the index is a mistake.

Click on the gray “Excluded” section:

These pages are not indexed, and Google believes that is intentional on your part.

It is therefore worth monitoring why these pages are not indexed, because you don't want Google to leave out a page that you would like indexed!

Let's first list the exclusions due to technical causes:

Technical causes

Blocked due to unauthorized request (401): an authorization request (401 response) prevents Googlebot from accessing this page. If you want Googlebot to be able to crawl this page, remove the login requirement or allow Googlebot to access your page.

Not found (404): this page returned a 404 error when requested. Google discovered this URL without any explicit request or sitemap. Google may have discovered the URL through a link from another site, or the page may have been deleted. Googlebot will probably keep trying to access this URL for some time. There is no way to tell Googlebot to permanently forget a URL, but it will crawl it less and less often. 404 responses are not a problem if they are intentional; just avoid linking to them. If your page has moved, use a 301 redirect to the new location.

Crawl anomaly: an unspecified anomaly occurred while crawling this URL. It may be caused by a 4xx or 5xx response code. Try analyzing the page with the Fetch as Google tool to check whether it has problems that prevent crawling, then follow up with your technical team.

Soft 404: the page request returns what appears to be a soft 404 response. In other words, the page tells the user in a friendly way that it cannot be found, but without returning the corresponding 404 response code. We recommend either returning a 404 response code for “not found” pages, to prevent them from being indexed, and removing them from your internal linking, or adding content to the page so that Google no longer treats it as a soft 404.

Causes related to duplicates or canonicals

Alternate page with proper canonical tag: this page is a duplicate of a page that Google recognizes as canonical, and it correctly points to the canonical page. In theory there is nothing to do on Google's side, but we recommend checking why these 2 pages exist and are visible to Google, so that you can make the right fixes.

Duplicate without user-selected canonical: this page has duplicates, none of which is marked as canonical. Google considers that this page is not the canonical one. You should explicitly designate the canonical version of this page. Inspecting this URL should show the canonical URL selected by Google.

Duplicate, Google chose different canonical than user: this page is marked as canonical, but Google thinks another URL makes a better canonical and has indexed that one instead. We recommend checking where the duplicate comes from (a 301 redirect may be better than keeping both pages), then adding the appropriate canonical tags to make your intent clear to Google. This page was discovered without an explicit crawl request. Inspecting this URL should show the canonical URL selected by Google.

If you get this message on 2 different pages, it means they are too similar and Google sees no point in keeping both. Imagine you run a shoe store: if you have a “red shoes” page and a “black shoes” page with little or no content, or content that is too similar with barely more than the title changing, ask yourself whether these pages really need to exist and, if so, improve their content.

Duplicate, submitted URL not selected as canonical: the URL belongs to a set of duplicate URLs with no explicitly declared canonical page. You asked for this URL to be indexed, but because it is a duplicate and Google thinks another URL makes a better canonical, Google indexed that other URL instead of the one you submitted. The difference between this status and “Google chose different canonical than user” is that, here, you explicitly requested indexing. Inspecting this URL should show the canonical URL selected by Google.

Page with redirect: the URL is a redirect and was therefore not added to the index. There is nothing to do here, other than check that the list is correct.

Page removed because of legal complaint: the page was removed from the index because of a legal complaint.
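For the duplicate and canonical statuses above, a useful first check is to read the canonical URL a page declares and see whether it points to itself. This sketch parses a `<link rel="canonical">` tag from HTML you have already downloaded; the helper names and the sample page are assumptions for illustration.

```python
from html.parser import HTMLParser
from typing import Optional


class _CanonicalParser(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attributes.get("href")


def declared_canonical(html: str) -> Optional[str]:
    """Return the canonical URL declared in the page, or None if there is none."""
    parser = _CanonicalParser()
    parser.feed(html)
    return parser.canonical


def is_self_canonical(page_url: str, html: str) -> bool:
    """True if the page declares itself as canonical (ignoring a trailing slash)."""
    canonical = declared_canonical(html)
    return canonical is not None and canonical.rstrip("/") == page_url.rstrip("/")


PAGE = '<head><link rel="canonical" href="https://mon-site.com/chaussures/"></head>'
print(declared_canonical(PAGE))
print(is_self_canonical("https://mon-site.com/chaussures-rouges/", PAGE))
```

A page that declares another URL as canonical, as in this example, is one you would expect to find under “Alternate page with proper canonical tag”.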

Causes related to indexing management

Excluded by “noindex” tag: when Google tried to index the page, it found a “noindex” directive and therefore did not index it. If you don't want the page indexed, you did the right thing. If you do want it indexed, remove the “noindex” directive.

Blocked by page removal tool: the page is currently blocked by a URL removal request. If you are a verified site owner, you can use the URL removal tool to see who submitted the request. Removal requests are only valid for 90 days after the removal date. After that period, Googlebot may crawl your page again and index it, even if you don't submit another indexing request. If you don't want the page indexed, use a “noindex” directive, put the page behind a login, or delete it.

Blocked by robots.txt: a robots.txt file prevents Googlebot from accessing this page. You can check this with the robots.txt Tester tool. Note that this does not mean the page won't be indexed by other means. If Google can find other information about this page without loading it, the page could still be indexed (although this is rarer). To make sure a page is not indexed by Google, remove the robots.txt block and use a “noindex” directive.

Crawled - currently not indexed: the page was crawled by Google but not indexed. It may be indexed in the future; there is no need to resubmit this URL for crawling.

This happens fairly often with paginated pages beyond the first page, because the search engine sees no point in indexing them in addition to the first one.

It may also affect a large number of very similar or low-quality pages that Google sees no point in indexing. Ask yourself whether it would be better to deindex them deliberately, unless you plan to work on them in the near future.

Discovered - currently not indexed: the page was found by Google but has not been crawled yet. Typically, this means that Google tried to crawl the URL but the site was overloaded, so Google had to postpone the crawl. This is why the last crawl date is empty in the report.

This also happens fairly often with paginated pages beyond the first page, because the search engine sees no point in crawling them in addition to the first one.

It is also worth investigating page depth: when you have many deep pages, it is hard for the crawler to crawl your site properly, so it decides to skip a part of the site it considers “uninteresting”. This problem should be fixed as soon as possible, because it can affect the overall crawlability of the site and therefore other pages that are crucial for your SEO.
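Page depth can be estimated with a breadth-first search over your internal links, starting from the home page. The sketch below assumes you already have a map of each page to the pages it links to (for example from a crawler export); the link graph and the depth threshold are invented for illustration.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Minimum number of clicks needed to reach each page from the home page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in a BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


links = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/shop": [],
}
depths = click_depths(links, "/")
deep_pages = [page for page, depth in depths.items() if depth > 2]
print(depths)
print(deep_pages)
```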

You now know everything about the Excluded URLs report in Search Console!

A Smartkeyword consultant can help you audit your index coverage: don't hesitate to contact us!

The URL inspection tool

The inspection tool gives you information about a specific page: AMP (Accelerated Mobile Pages) errors, structured data errors and indexing issues.

To access this report, enter the URL you want to inspect in the search bar.

With this report you can also:

- Inspect an indexed URL: to gather information about the version of your page that Google has indexed.

- Inspect a live URL: to determine whether a page on your site can be indexed, by clicking the “Test live URL” button at the top right.

- Request indexing: to ask Google to crawl an inspected URL, by clicking “Request indexing” in the first section of the report.

Managing links with Google Search Console

Manage your link building: which sites link to your site?

Go to the “Links” tab in the menu.

The link-building information is in the left-hand column, named “External links”.

You will find 3 reports:

- Top linked pages, which lists the pages of your site that external links point to.

- Top linking sites, which lists the external sites that link to your site.

- Top linking text, which shows the anchor texts used in these external links.

Will I see all my links?

Not all links to your site are necessarily listed. This is normal, so don't worry! Here are the possible reasons:

- A robots.txt problem: the data shows the content detected and crawled by Googlebot during crawling. If a page on your site is blocked by a robots.txt file, the links pointing to that page are not listed. The total number of such pages is available in the Crawl section, under “Blocked URLs (robots.txt)”, in Search Console.

- A problem with your 404 pages: if a broken or incorrect link is detected on your site, it is not listed in this section. We recommend checking the Crawl Errors page regularly for 404 errors detected by Googlebot while crawling your site; that way, when an external site links to you, you can be sure you actually benefit from the link popularity it passes on.

- Google has not crawled it yet! Google may not yet have analyzed the page of the external site that links to you, in which case it could not see the link. Be patient, it should happen soon!

- Your site may be indexed under https or under another version (with or without www). For example, if you don't see the link data you expect for http://www.example.com, make sure you have also added a property for http://example.com to your account, then check the data for that property.

Pro tip: To limit this, set a preferred domain.

Manage your internal links

The number of internal links pointing to a page helps search engines determine how important that page is. If an important page is missing from the list, or if a less important page has a large number of internal links, it may be worth reviewing your site's internal structure.
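If you have your own crawl data, you can count inbound internal links per page and compare the result with what the report shows. This is a minimal sketch over a hypothetical link map (each page mapped to the pages it links to); it counts each linking page once and ignores self-links.

```python
from collections import Counter


def inbound_link_counts(links: dict[str, list[str]]) -> Counter:
    """Count, for each page, how many other pages link to it."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):  # count each linking page only once
            if target != source:
                counts[target] += 1
    return counts


links = {
    "/": ["/pricing", "/blog"],
    "/blog": ["/pricing", "/"],
    "/pricing": ["/"],
    "/legal": ["/"],
}
for page, count in inbound_link_counts(links).most_common():
    print(page, count)
```

A page with a count of zero, like `/legal` here, receives no internal links at all and is worth reviewing if it matters for your SEO.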

That's all well and good, but how do you put this information to use?

- Highlight a specific page

If you want to make sure a page on your site is well linked (that it receives multiple internal links from other pages of the site), you can check it in this menu.

- Delete or rename pages

If you want to delete or rename pages on your site, check this information beforehand to identify any links that would break and avoid that kind of problem.

- If no information appears in this report…

This may mean that your site is new and has not been crawled yet. Otherwise, check the crawl errors report to find out whether Google ran into problems while crawling your site.

How do I manage my site's mobile usability?

Global web traffic from mobile devices is growing. In addition, recent studies show that mobile users are more likely to return to mobile-friendly sites. Google helps you with this!

Go to the “Enhancements” section of the menu, then “Mobile Usability”.

The chart shows the mobile usability issues detected on your site over time.

Here are the different issues you may run into:

- Uses incompatible plugins

- Viewport not set

- Viewport not set to “device-width”, meaning the viewport cannot adapt to different screen sizes.

- Text too small to read

- Clickable elements too close together
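The two viewport issues in this list can be checked directly in a page's HTML. The sketch below looks for a `<meta name="viewport">` tag and tests whether it sets `width=device-width`; the helper names are ours, and the returned labels simply mirror the issue names above.

```python
from html.parser import HTMLParser
from typing import Optional


class _ViewportParser(HTMLParser):
    """Remember the content of the <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.content = (attributes.get("content") or "").lower()


def viewport_issue(html: str) -> Optional[str]:
    """Return the matching issue label, or None if the viewport looks fine."""
    parser = _ViewportParser()
    parser.feed(html)
    if parser.content is None:
        return "Viewport not set"
    if "width=device-width" not in parser.content.replace(" ", ""):
        return 'Viewport not set to "device-width"'
    return None


print(viewport_issue('<meta name="viewport" content="width=device-width, initial-scale=1">'))
print(viewport_issue('<meta name="viewport" content="width=1024">'))
```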

Go further with Google Search Console

Since the old Google Search Console is still available, you can push your website optimizations even further with the following reports:

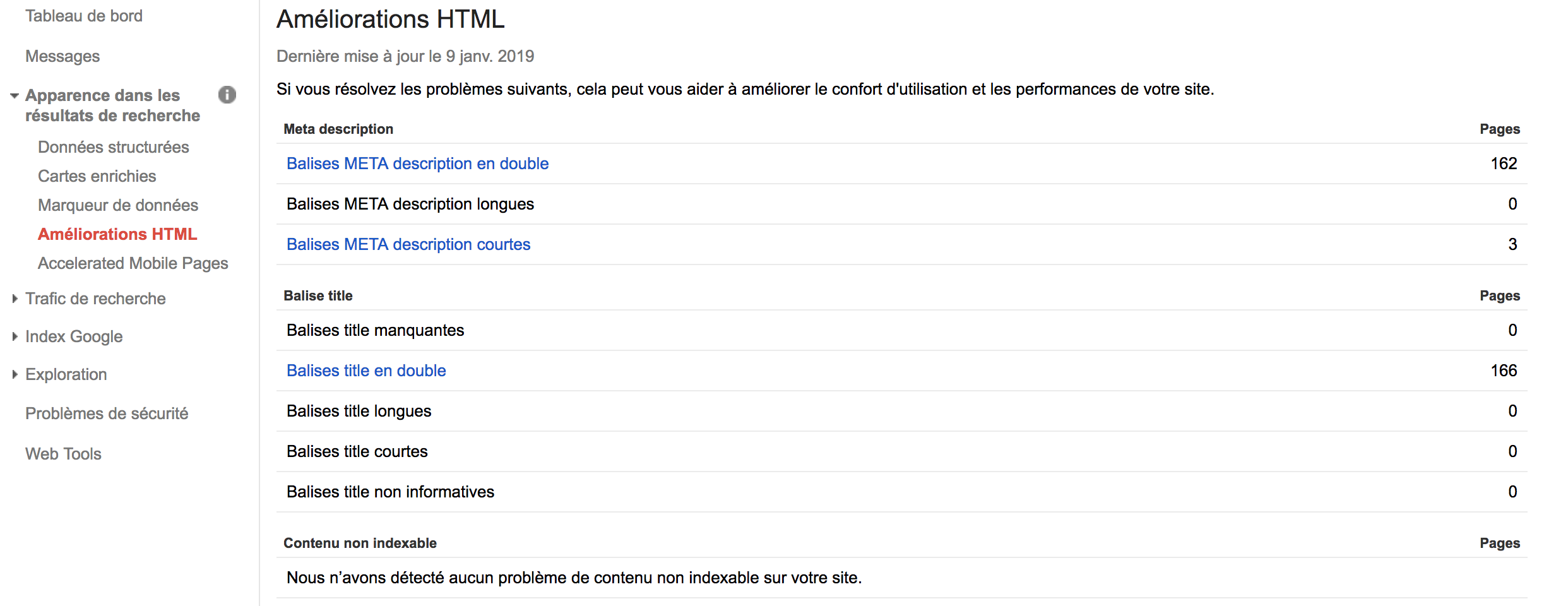

HTML Improvements

This report shows any difficulties Google encountered when crawling and indexing your site. Check it regularly to identify changes that could improve your ranking in Google's search results pages while also giving your visitors a better user experience. For example, the report below shows duplicate meta descriptions.

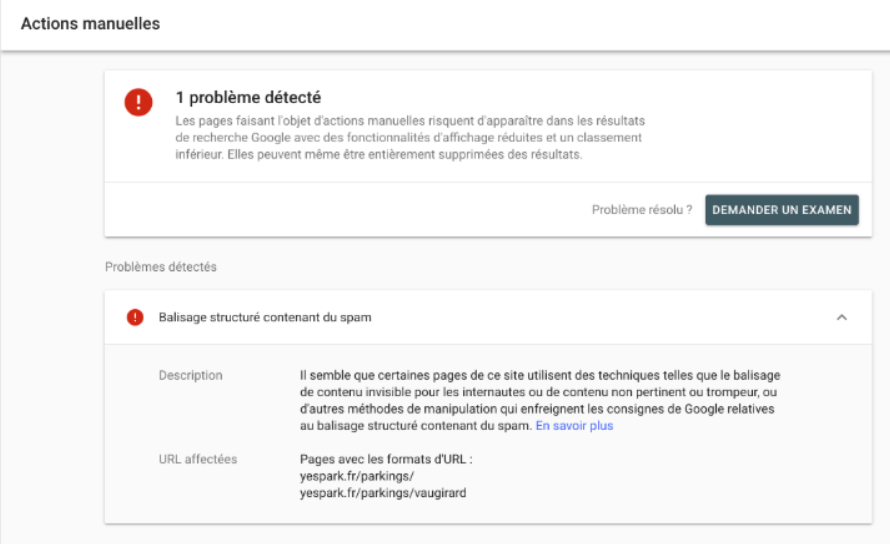

Manual actions

This report, which is also available in the new Google Search Console, lists the known issues on your site and gives you information to help you resolve them. In the example below, Google has detected an issue.

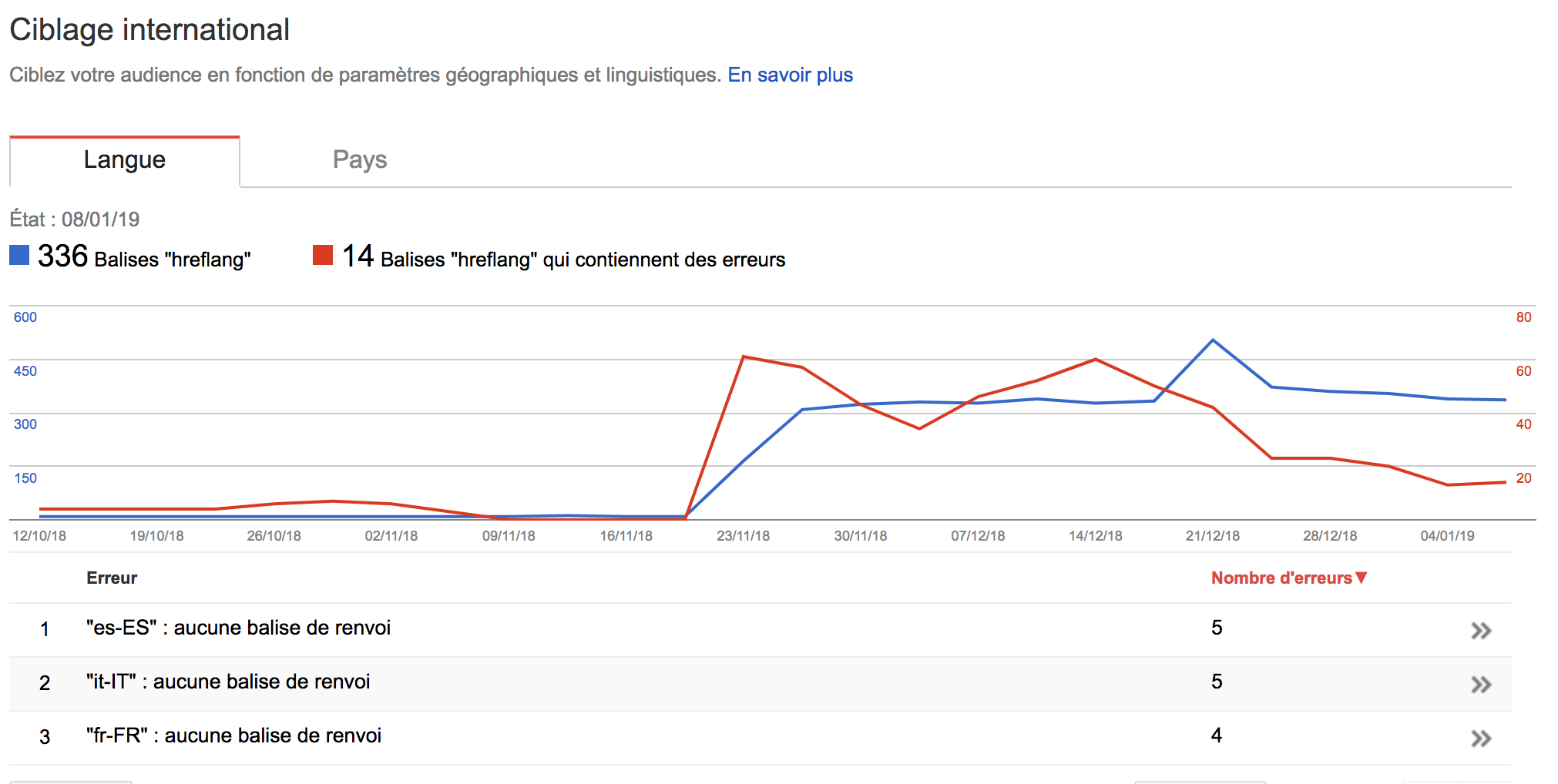

International targeting

This report lets you manage one or more websites designed for several countries and several languages; you need to make sure that the version of your pages shown in the search results is appropriate in terms of both country and language.

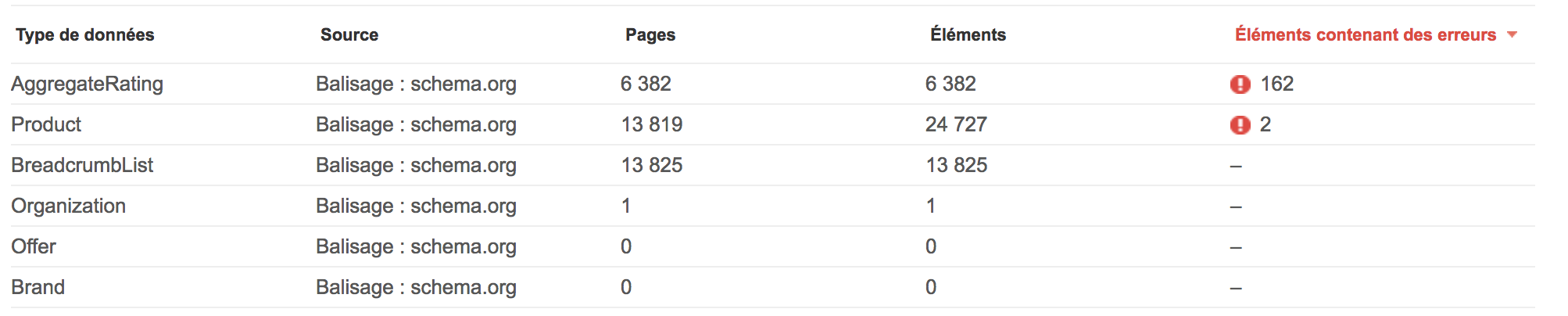

Structured data

This report, found under “Search Appearance > Structured Data”, lists the URLs of your site that contain structured data. You can now click on each URL to find out whether Google has correctly picked up your markup (fantastic, isn't it?).

Data Highlighter

“Search Appearance > Data Highlighter”: this tool helps Google interpret the format of the structured data on your website.

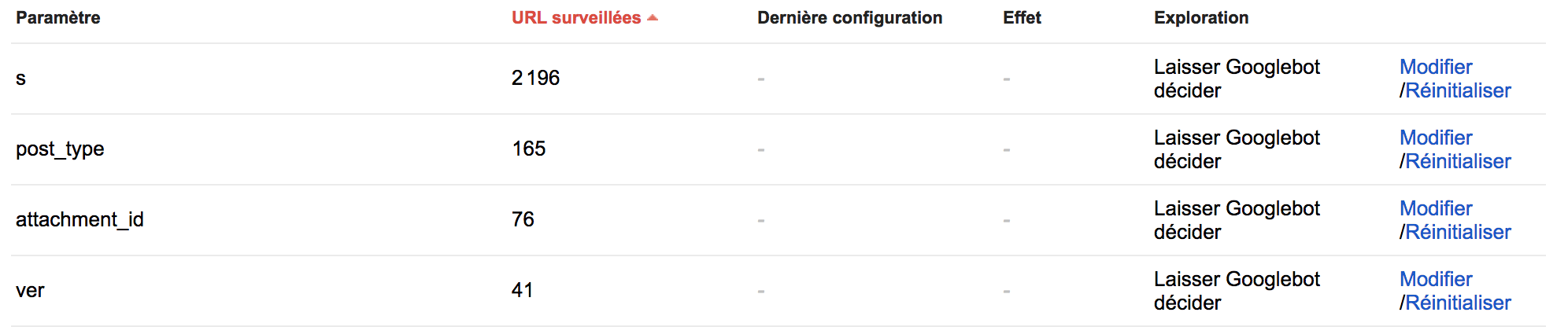

URL parameters

“Crawl > URL Parameters”: this report lets you tell Google what your site's URL parameters are for and how to interpret them.

Conclusion

All these features explain why webmasters agree that Google Search Console is a very valuable complement to Google Analytics, once again with the twofold goal of better understanding where your traffic comes from and working on it in a more targeted way.

What's next?

You have just discovered with Smartkeyword how to use Search Console. Next, find out how to add a user in Search Console.
